How to unit test machine learning code?

Why are unit tests important? Why is testing important at all? And how do you do it for machine learning code? These are the questions I will answer.

I suggest that you grab a good coffee while you read what follows. If you write AI code at Neuraxio, or write AI code using software that Neuraxio distributed, this article is especially important for you: it explains what's going on with the testing and how it works.

The testing pyramid

Have you ever heard of the testing pyramid? Martin Fowler has a nice article on this topic. To summarize: you should have LOTS OF small "unit" tests that test small components of your software, then a FEW medium-sized "integration" tests (which will probably test your service application layer), and then VERY FEW "end-to-end" (E2E) tests that test the whole thing at once (probably through your AI backend's REST API) with a real and complete use case, to see if everything works together. It makes a pyramid: unit tests at the bottom, integration tests in the middle, and E2E tests at the top.

So we have a pyramid of tests like this:

[Figure: the testing pyramid]

Why have different quantities of tests at these different granularities?

Note that integration tests are sometimes also called acceptance tests. Terminology differs from one workplace to another. I personally prefer "acceptance tests", since the name refers to the business acceptance of a test case: an acceptance test case is like a business requirement written into code.

Suppose that in your daily work routine, you edit some code to fix a bug, measure something in your code, or introduce new features. You change something thinking that it helps. Since nobody is perfect, you will eventually make mistakes. How often has your code worked on the first try?

- Without tests at all: you will catch the bug 2 weeks later and probably have no clue where it is or how to fix it. Fixing it will cost 10x what it would have cost had you caught it right when you wrote the code.

- With large and medium tests but no unit tests: you will know as soon as you make the change that something is wrong, but you won't know exactly where it is in your code. Fixing the bug will cost about 3x what it would if unit tests told you where it was.

- With small unit tests: not only will you know instantly that you have a bug when you make the change and run the tests, but if your unit tests have good code coverage (say 80%), chances are that a unit test covers the piece of code you've just modified, so you'll know instantly and exactly where the bug is and why.

To sum up: unit testing gives you, and especially your team, considerable speed. Few programmers enjoy being stuck debugging software. Cut debugging time by using unit tests, and not only will everyone be happier, everyone will also code faster.

"Understanding code is by far the activity at which professional developers spend most of their time."

Unit tests

A unit test has 3 parts, called the AAA steps of a unit test:

- Arrange: create the variables or constants that will be used in the next phase, Act. If the same variables are created in many tests, they can be extracted at the top of the test file or elsewhere to limit code duplication (the test can even be parametrized, as in an example later on).

- Act: call your code to test using the variables or constants set up just above in the Arrange, and receive a result.

- Assert: verify that the result you obtained in the Act phase matches what you'd expect.
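To make the three phases concrete, here is a minimal pytest-style sketch (plain `test_*` functions with `assert`, so no import is needed). The `min_max_scale` function is hypothetical, written only to have something small to test:

```python
def min_max_scale(values):
    """Hypothetical function under test: rescale a list of numbers to [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Avoid dividing by zero when all values are equal.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def test_min_max_scale_maps_extremes_to_0_and_1():
    # Arrange: a tiny, hand-designed input and the output we expect.
    values = [2.0, 4.0, 6.0]
    expected = [0.0, 0.5, 1.0]

    # Act: call the code under test.
    result = min_max_scale(values)

    # Assert: compare the result with the expectation.
    assert result == expected
```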

Example #1 of the AAA in an ML unit test:


See how the test is first set up (arranged) at the beginning? The test above is further set up through an argument of the test function, meaning that, with PyTest's parametrize, it can be run again and again with different arguments. This is a good example of a well-parametrized unit test that also follows the AAA.
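Here is a hypothetical sketch of the same parametrization idea (not the code from Example #1), assuming pytest is installed. The `accuracy` function and the test cases are illustrative; `pytest.mark.parametrize` feeds each tuple to the test function as arguments:

```python
import pytest


def accuracy(y_true, y_pred):
    """Hypothetical function under test: fraction of matching labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on empty inputs")
    matches = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return matches / len(y_true)


@pytest.mark.parametrize(
    "y_true, y_pred, expected",
    [
        ([0, 1, 1, 0], [0, 1, 1, 0], 1.0),   # all predictions right
        ([0, 1, 1, 0], [1, 0, 0, 1], 0.0),   # all predictions wrong
        ([0, 1, 1, 0], [0, 1, 0, 0], 0.75),  # 3 out of 4 right
    ],
)
def test_accuracy(y_true, y_pred, expected):
    # Arrange: pytest injects one (y_true, y_pred, expected) case per run.
    # Act: compute the metric.
    result = accuracy(y_true, y_pred)
    # Assert: the metric matches the hand-computed value.
    assert result == expected
```

Running `pytest` on this file runs `test_accuracy` three times, once per tuple, and reports each case separately, so a failure tells you which input broke.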

Example #2 of the AAA in an ML unit test:

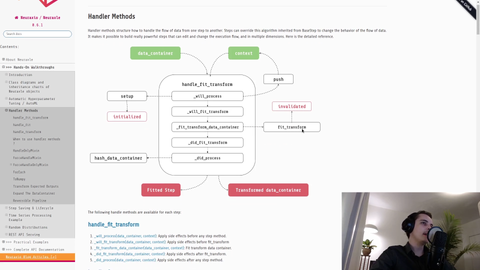

In the test above, written by Alexandre Brillant, we also see the AAA. First, we arrange: we create an ML pipeline, data inputs (X), and expected outputs (y). Then, we act: we call "fit_transform" on the pipeline to get a prediction result (Y). Finally, we assert: we check that the prediction result matches what we expected (y==Y). It is a very simple test, yet it can catch many bugs.
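The same AAA shape can be sketched in plain Python. `MultiplyBy` and `SimplePipeline` are hypothetical stand-ins written for this illustration; they only mimic the `fit_transform` idea and are not the API of any real library:

```python
class MultiplyBy:
    """Hypothetical pipeline step: multiplies every input by a constant."""

    def __init__(self, factor):
        self.factor = factor

    def fit_transform(self, data_inputs):
        # A stateless step: "fitting" learns nothing, so we only transform.
        return [x * self.factor for x in data_inputs]


class SimplePipeline:
    """Hypothetical pipeline: calls fit_transform on each step in order."""

    def __init__(self, steps):
        self.steps = steps

    def fit_transform(self, data_inputs):
        outputs = data_inputs
        for step in self.steps:
            outputs = step.fit_transform(outputs)
        return outputs


def test_pipeline_fit_transform():
    # Arrange: a pipeline, data inputs (X) and expected outputs (y).
    p = SimplePipeline([MultiplyBy(2), MultiplyBy(3)])
    X = [1, 2, 3]
    y = [6, 12, 18]

    # Act: fit and transform in one call to get the prediction result (Y).
    Y = p.fit_transform(X)

    # Assert: the prediction matches what we expected.
    assert Y == y
```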

Unit tests in ML rarely use lots of data. Most of the time, they use small hand-designed data samples, just to check that the code runs and behaves as expected.

You'd then use medium-sized fake (or sometimes real) datasets in acceptance tests (medium integration tests), and real data in the end-to-end tests.
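For the medium-sized fake datasets, one option is a seeded generator, so that every test run sees exactly the same data. Here is a minimal sketch using the standard library's `random` module; `make_fake_dataset` and its labelling rule are assumptions made up for illustration:

```python
import random


def make_fake_dataset(n_samples=1000, seed=42):
    """Hypothetical helper: build a reproducible, medium-sized fake dataset.

    Each sample has 2 features drawn uniformly in [0, 1); the label is 1 when
    the features sum to more than 1, so a correct model can learn the rule.
    """
    rng = random.Random(seed)  # fixed seed: same data on every test run
    X = [[rng.random(), rng.random()] for _ in range(n_samples)]
    y = [1 if a + b > 1.0 else 0 for a, b in X]
    return X, y
```

Using a private `random.Random(seed)` instance rather than the global `random.seed` keeps the dataset reproducible even if other code consumes random numbers in between.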

Sometimes, a unit test will test more than one thing. For instance, you'll test two things at once because your "Arrange" part uses another component. Hopefully, that other component was already tested individually in its own test. You can also use what are called "mocks" or "stubs" to avoid exercising two things in the same test, although mocking is a bit more advanced and more common in Java than in Python. Personally, I often prefer writing stubs over mocks, as stubs feel more straightforward to reuse across many different unit tests.
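Here is a sketch of both approaches around a hypothetical `evaluate` function. The hand-written `StubModel` simply returns canned predictions; the second test uses the standard library's `unittest.mock.Mock`, which additionally records how it was called, so the test can assert on that interaction:

```python
from unittest.mock import Mock


class StubModel:
    """Hand-written stub: returns canned predictions instead of a real model.

    It lets us test evaluate() without training anything, so a failure
    points at evaluate() itself and not at the model.
    """

    def __init__(self, canned_predictions):
        self.canned_predictions = canned_predictions

    def predict(self, X):
        # Ignore the inputs and return the predictions given at construction.
        return list(self.canned_predictions)


def evaluate(model, X, y):
    """Hypothetical code under test: error rate of model.predict on (X, y)."""
    predictions = model.predict(X)
    errors = sum(1 for p, t in zip(predictions, y) if p != t)
    return errors / len(y)


def test_evaluate_counts_errors_with_stub():
    # Arrange: the stub "predicts" one wrong label out of four.
    model = StubModel([0, 1, 1, 1])
    X = [[0.0], [1.0], [2.0], [3.0]]  # content is irrelevant to the stub
    y = [0, 1, 1, 0]
    # Act
    error_rate = evaluate(model, X, y)
    # Assert
    assert error_rate == 0.25


def test_evaluate_calls_predict_once_with_mock():
    # Arrange: configure the mock's return value instead of writing a class.
    model = Mock()
    model.predict.return_value = [0, 1]
    X, y = [[0.0], [1.0]], [0, 1]
    # Act
    error_rate = evaluate(model, X, y)
    # Assert: on the result, and on how the mock was used.
    assert error_rate == 0.0
    model.predict.assert_called_once_with(X)
```

The stub is a tiny reusable class you can pass to many tests; the mock needs no class but couples the test to how `evaluate` calls `predict`.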

The TDD loop

Someone who writes unit tests naturally ends up doing this 3-step TDD (test-driven development) loop:

- RED: write a unit test that fails;

- GREEN: make the test pass by writing proper code;

- BLUE: refactor the code by cleaning a bit what you've just written before moving on.

Obviously, while doing the TDD loop, you'll often re-run your whole unit test suite to make sure you didn't break anything elsewhere in the codebase.
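As a hedged illustration, one TDD loop on a hypothetical `one_hot` helper could end up like this, with comments marking each step. In practice, the test is written and run (and fails) before the function exists:

```python
# RED: write the test first. Running it before one_hot exists fails
# with a NameError, which is the expected "red" state.
def test_one_hot_encodes_each_label():
    # Arrange
    labels = [0, 2, 1]
    # Act
    encoded = one_hot(labels, n_classes=3)
    # Assert
    assert encoded == [[1, 0, 0], [0, 0, 1], [0, 1, 0]]


# GREEN: write the simplest code that makes the test pass.
# BLUE: once it passes, clean it up (here: a docstring and a guard against
# out-of-range labels), then re-run the whole test suite.
def one_hot(labels, n_classes):
    """Encode integer class labels as one-hot lists of length n_classes."""
    encoded = []
    for label in labels:
        if not 0 <= label < n_classes:
            raise ValueError(f"label {label} is out of range [0, {n_classes})")
        row = [0] * n_classes
        row[label] = 1
        encoded.append(row)
    return encoded
```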

The ATDD loop

The ATDD (acceptance test-driven development) loop is an improvement on the TDD loop. It can be summarized as follows: write an acceptance test first, and then do many TDD loops to fulfill this acceptance test.

So the ATDD loop looks like this:

- Acceptance RED: write an acceptance test that fails;

- Acceptance GREEN: make the test pass by writing proper code;

    - 1. Unit RED: write a unit test that fails;
      2. Unit GREEN: make the test pass by writing proper code;
      3. Unit BLUE: refactor the code by cleaning a bit what you've just written before moving on.

    - 1. Unit RED: write a unit test that fails;
      2. Unit GREEN: make the test pass by writing proper code;
      3. Unit BLUE: refactor the code by cleaning a bit what you've just written before moving on.

    - 1. Unit RED: write a unit test that fails;
      2. Unit GREEN: make the test pass by writing proper code;
      3. Unit BLUE: refactor the code by cleaning a bit what you've just written before moving on.

    - [Continue TDD loops as long as required to solve the acceptance test...]

- Acceptance BLUE: refactor the code by cleaning a bit what you've just written before moving on.
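Here is a compressed, hypothetical sketch of where an ATDD session could end up: the acceptance test (written first) states a business-level requirement on a medium-sized fake dataset, and the smaller unit tests are those written during the inner TDD loops. `ThresholdClassifier`, `_mean`, and the 90% accuracy requirement are all made up for illustration:

```python
import random


class ThresholdClassifier:
    """Hypothetical model: predicts 1 when x is above a learned threshold."""

    def __init__(self):
        self.threshold = None

    def fit(self, X, y):
        # Learn the threshold as the midpoint between the two class means.
        mean_0 = _mean([x for x, label in zip(X, y) if label == 0])
        mean_1 = _mean([x for x, label in zip(X, y) if label == 1])
        self.threshold = (mean_0 + mean_1) / 2
        return self

    def predict(self, X):
        return [1 if x > self.threshold else 0 for x in X]


def _mean(values):
    return sum(values) / len(values)


# Acceptance test, written FIRST (acceptance RED): it encodes the business
# requirement "the trained model reaches at least 90% accuracy" on a
# seeded, medium-sized fake dataset, and stays red until the feature works.
def test_acceptance_model_reaches_90_percent_accuracy():
    rng = random.Random(0)
    X = [rng.random() for _ in range(1000)]
    y = [1 if x > 0.5 else 0 for x in X]

    model = ThresholdClassifier().fit(X, y)
    predictions = model.predict(X)

    accuracy = sum(p == t for p, t in zip(predictions, y)) / len(y)
    assert accuracy >= 0.9


# Unit tests, each written during one inner TDD loop (unit RED -> GREEN -> BLUE).
def test_mean_of_small_list():
    assert _mean([1.0, 2.0, 3.0]) == 2.0


def test_fit_learns_midpoint_threshold():
    model = ThresholdClassifier().fit([0.0, 1.0], [0, 1])
    assert model.threshold == 0.5


def test_predict_uses_threshold():
    model = ThresholdClassifier()
    model.threshold = 0.5
    assert model.predict([0.2, 0.8]) == [0, 1]
```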

Other tests

Of course, there are more types of tests. Some people write "border" tests, "database" tests, cloud "environment" tests, "uptime" tests (as in SLAs with uptime guarantees), and more. But the 3 types of tests presented above (E2E, acceptance/integration, unit) are the real deal for building proper enterprise software.

"One difference between a smart programmer and a professional programmer is that the professional understands that clarity is king. Professionals use their powers for good and write code that others can understand."

- Source: Robert C. Martin, Clean Code: A Handbook of Agile Software Craftsmanship